Synthetic Data Is a Dangerous Teacher

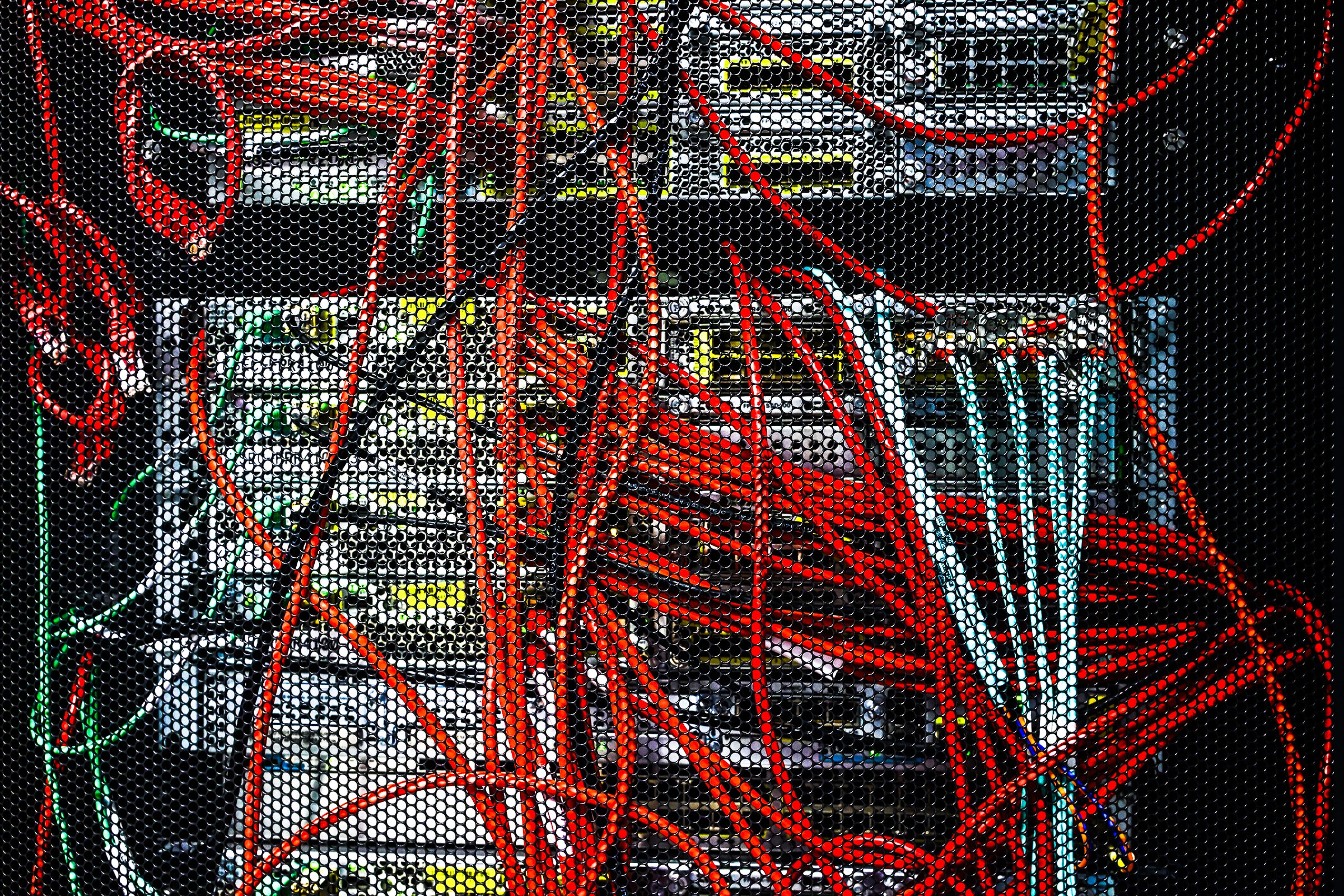

Synthetic data, or data that is artificially generated rather than collected from real-world sources, is increasingly used to train machine learning and AI models. While it may seem like a convenient solution to data scarcity or privacy concerns, relying too heavily on it can be dangerous.

One of the main issues with synthetic data is that it may not capture the complexity of real-world data, such as measurement noise, rare edge cases, and subtle correlations that the generator was never designed to reproduce. Models trained on it can then produce biased or inaccurate results, since a model is only as good as the data it is trained on.

Additionally, synthetic data can create a false sense of security, as models trained on synthetic data may perform well in controlled environments but fail when faced with real-world scenarios. This can have serious consequences, especially in high-stakes applications like healthcare or autonomous vehicles.
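
A toy illustration of that failure mode, under deliberately simple assumptions: the "real" data and the synthetic generator below are both hypothetical, the model is a one-dimensional threshold rule, and the generator's only mistakes are placing the class boundary at x = 5 instead of x = 7 and leaving out label noise. A model fit to the synthetic data scores almost perfectly on held-out synthetic data but noticeably worse on the real sample.

```python
import random

def make_real(n, rng):
    # Hypothetical "real-world" data: the label flips at x = 7, with 10% label noise.
    data = []
    for _ in range(n):
        x = rng.uniform(0, 10)
        y = 1 if x > 7 else 0
        if rng.random() < 0.1:
            y = 1 - y  # real measurements are messy
        data.append((x, y))
    return data

def make_synthetic(n, rng):
    # Hypothetical generator built on a wrong assumption: clean labels, boundary at x = 5.
    xs = [rng.uniform(0, 10) for _ in range(n)]
    return [(x, 1 if x > 5 else 0) for x in xs]

def accuracy(data, t):
    # Fraction of points where the rule "x > t means label 1" is correct.
    return sum((x > t) == (y == 1) for x, y in data) / len(data)

def fit_threshold(data):
    # Try every observed x as a cut point; keep the one with the best training accuracy.
    return max((x for x, _ in data), key=lambda t: accuracy(data, t))

rng = random.Random(0)
synth_train = make_synthetic(500, rng)
synth_test = make_synthetic(500, rng)
real_test = make_real(500, rng)

t = fit_threshold(synth_train)
synth_acc = accuracy(synth_test, t)
real_acc = accuracy(real_test, t)
print(f"threshold={t:.2f}  synthetic test acc={synth_acc:.2f}  real test acc={real_acc:.2f}")
```

The synthetic test set shares the generator's blind spot, so it cannot reveal the error; only the real sample does.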

Synthetic data can also perpetuate and amplify the biases and inequalities present in the data used to create it. If the synthetic data is not properly curated and validated, it can reinforce harmful stereotypes and discriminatory practices.
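
A minimal sketch of how a generator can amplify an imbalance that already exists, assuming (hypothetically) that it learns the group shares in its source data exactly but samples them with a temperature T < 1, which favors already-common outcomes. Feeding each generation's output back in as the next generation's source compounds the effect. The group names, shares, and temperature are illustrative, not measurements.

```python
def sharpen(probs, temperature):
    """Temperature-scale a categorical distribution: p_i ** (1 / T), renormalized.

    T < 1 shifts probability mass toward already-common categories.
    """
    weights = {k: p ** (1.0 / temperature) for k, p in probs.items()}
    total = sum(weights.values())
    return {k: w / total for k, w in weights.items()}

# Hypothetical group shares in the real data used to fit the generator.
real = {"group_a": 0.7, "group_b": 0.3}

history = [real]
for _ in range(3):
    # Each round learns the previous round's output and samples it at an assumed T = 0.8.
    history.append(sharpen(history[-1], temperature=0.8))

for generation, dist in enumerate(history):
    print(generation, {k: round(v, 3) for k, v in dist.items()})
```

With these assumed numbers the minority share falls from 0.3 to about 0.16 after three rounds. Nothing in the pipeline introduced a new bias; it only sharpened the one already present in the source data.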

Researchers and practitioners should be cautious when using synthetic data and should always validate and test their models on real-world data. Synthetic data can be a useful tool in certain situations, but it should not be treated as a substitute for genuine data collection and analysis.
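
One way to put that advice into practice is the comparison often called "train on synthetic, test on real" (TSTR): fit one model on synthetic data and one on real training data, then score both on a real test set that neither the generator nor either model has seen. The sketch below assumes a tiny nearest-centroid classifier, Gaussian toy data, and a hypothetical tolerance of 0.05 accuracy; `tstr_report` and the two generators are illustrative names, not an existing library API.

```python
import random
from statistics import mean

def sample(n, centers, rng):
    # n labelled points: label y is 0 or 1, feature x ~ Normal(centers[y], 1).
    labels = [rng.randrange(2) for _ in range(n)]
    return [(rng.gauss(centers[y], 1.0), y) for y in labels]

def fit_centroids(data):
    # Nearest-centroid classifier: remember the mean feature value for each label.
    labels = {y for _, y in data}
    return {label: mean(x for x, y in data if y == label) for label in labels}

def predict(model, x):
    # Assign x to the label whose centroid is closest.
    return min(model, key=lambda label: abs(x - model[label]))

def accuracy(model, data):
    return sum(predict(model, x) == y for x, y in data) / len(data)

def tstr_report(synthetic, real_train, real_test, tolerance=0.05):
    """Compare train-on-synthetic/test-on-real (TSTR) with train-on-real/test-on-real (TRTR).

    real_test must be held out from the generator and from both models.
    tolerance (hypothetical) is the largest accuracy drop we are willing to accept.
    """
    tstr = accuracy(fit_centroids(synthetic), real_test)
    trtr = accuracy(fit_centroids(real_train), real_test)
    return {"tstr": tstr, "trtr": trtr, "acceptable": trtr - tstr <= tolerance}

rng = random.Random(42)
real_centers = {0: 0.0, 1: 3.0}
real_train = sample(1000, real_centers, rng)
real_test = sample(1000, real_centers, rng)    # never shown to the generator or the models
faithful = sample(1000, real_centers, rng)     # hypothetical generator that matches reality
skewed = sample(1000, {0: 0.0, 1: 6.0}, rng)   # hypothetical generator with a wrong assumption

good = tstr_report(faithful, real_train, real_test)
bad = tstr_report(skewed, real_train, real_test)
print(good)
print(bad)
```

A large TSTR shortfall relative to TRTR flags synthetic data that teaches the wrong lesson; a small one is necessary, but not sufficient, evidence that the data is safe to rely on.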

In conclusion, synthetic data may seem like a quick fix, but it can be a dangerous teacher for machine learning models. It is crucial to approach it with caution and to prioritize real-world data whenever possible.